Category: Future

Are you boxed in? Getting beyond professional roles and job titles

Every time I have looked for a job, I have had to box myself into an application template or a job spec. To cover every possible case, I used to make a couple of CVs, tweak my LinkedIn profile, and produce multiple portfolios and a few different cover letters as standard procedure. I’m not the only one.

I have never walked the conventional route. At university, I studied two specialties rather than one, which was the default in the school’s scheme. I was into coding, which almost no one else was, so I had the wonderful chance to work for my teachers and learn their design practices while writing the code that made their designs a reality. It was very clear to me then that design alone is not enough to make things real, and only later did I learn that design and development together are also not enough: I had to learn about business too.

I don’t believe in linear growth; I believe in exponential growth (like a startup). I believe cross-disciplinary teams are strong, and the same is true for cross-disciplinary people. However, it’s not easy to convince people of that. I live in hope that the new world will see these kinds of people as Da Vincis, rather than as “unfocused”, which seems to be the typical response.

Titles are misleading

When filling in an application template or writing a CV, we’ve been taught that titles are important. I believe titles are worthless, because they aren’t trustworthy indicators of what people do. It’s better to rely on descriptions of work, and even better to have references. What’s good about titles is that they encapsulate the discipline: it’s easy to tell the orientation of a person, or which profession they belong to. Still, in the end, what counts and what’s trusted is real feedback from trusted contacts.

Changing jobs

Sixty percent of people claim they would change career if they could start over, and 51% of twenty-somethings already regret their career choice (Source: School of Life, 2008).

People might think that a change of career suggests someone wasn’t good at their previous role, or that they are unfocused, but I strongly disagree. Many people transition from another discipline to design and vice versa.

According to the Bureau of Labor Statistics (2017), people change jobs an average of 12 times during their working lives and stay in each job for an average of 4.2 years. Tenure also varies with career stage: between the ages of 25 and 35, each role lasts around 2.5 years, and once people reach more senior roles it’s around six years.

Changing a job is not the same as changing a career

One in every 10 people in the UK considers changing career (Guidance Council, 2010). According to 2015 and 2016 data from the Current Population Survey, about 6.2 million workers (4 percent of the total workforce) moved from one occupational group to another. Are we making it easy for them to do that? Why do they want to do it? And why do so many people think that changing career is ‘madness’, whereas changing jobs is quite ordinary?

Why not stay in a ‘career’ and be an ‘expert’?

I am a musician but, like most musicians, I am not on stage alongside Beyoncé. Most of us don’t even make money from music. We’re hobby-professional musicians who do other things for a living, but we still maintain our love and understanding of music. Some might argue it’s a purer way, one that reflects our truth, freed from ‘the industry’ so our music can be loved by people who really value it. Am I better than Jay-Z? Probably not. Would I be if I had done it 100% of the time? Maybe. Would I be as successful as he is? Who knows.

Granted, making money from music is harder than in almost any other profession, but is making money from your skills enough? Or is music more about making your own ‘art’? Surely the number of people who get paid for that is tiny! Even if you can make money from something you love, you’ll surely have aspirations: to grow bigger, to learn new things and not to do the same stagnant thing over and over again.

Most people can’t be experts

My point is that becoming an expert is hard. Only 2% of people in each field achieve that level of mastery, while the others suffer from boredom (after two years of doing the same job), lack of challenge and lack of progression. This leads to mediocrity and, eventually, to redundancy at 55, when that person can’t find a job anymore because they don’t have other skills and are so worn out that they haven’t learned anything new professionally in the past 10 years.

And this leads me to say: once an expert, not always an expert. People move on, time doesn’t freeze, and people who were once the tip of the spear could just as well be stuck in the ground somewhere now. The only thing that lives is organic growth. How many people had a one-hit wonder, a short career as a model, or a successful startup, followed by many failures? Most! I applaud the lessons of history and learning from biographies and stories, but that kind of admiration oversimplifies a set of events that can never happen again.

The only things that people like to do again and again are things that were designed to make us addicts or ones that are instrumental to our survival.

So, when you’re searching for a new role and you feel like going cross-disciplinary, but are worried about how an employer is going to view your career so far, and how you’re going to deal with the questions about why you haven’t stuck in one role for a decade or more, take a look at these questions and think about how you might answer them.

Here are some questions worth asking yourself

- How long do you think it takes to master something?

- What happens to mastery if you neglect it and go and do something else for a while?

- What is the difference between being 80% good at many things, rather than being 100% good at just one thing?

- How does it feel to do the same things for a long time?

- How does my 100% compare with some other person’s 100%?

Within a person’s working life, the two key problems are interest and progression. Interest is hard to maintain now that we work for 55 years on average; it’s impossible to stay interested in the same field for that long. Progression is another stumbling block, because over those 55 years what keeps the best people alive is constant change and challenge. And since challenges grow as you rise up the ladder, compensation should grow with them. Not only because the cost of living is rising, but mainly because it’s the main way to motivate people in a positive way.

Not everyone is ambitious. Some people stay unhappy and won’t do anything about it. Some will always be unhappy no matter what happens or what they do. But in a workplace, when you see the amazing people who drive your business forward, the entrepreneurs or super-dedicated employees, you should help them. Help them learn more; help them change career. Be open to someone whose CV shows a career change. You might think it’s better for your business to have someone who has done one thing all their life, but the length of time spent doing a particular job has nothing to do with the quality of the output. It’s better to have diversity in a business, with people from all kinds of backgrounds, rather than disqualifying them. Humans have a desire to evolve, and the benefits of that are what you’ll get if you hire a person who doesn’t fit neatly into a box.

Facial recognition as a UX driver. From AR to emotion detection, how the camera turned out to be the best tool to decipher the world

The camera is finally on stage to solve UX, technology and communication between us all. Years after the Kinect was abandoned and Google Glass failed, there is a new hope. The impressive sensor array that Apple shrank from PrimeSense’s technology into the iPhone X is the beginning of emotion-dependent interactions. It’s not new; it’s better than that: it’s commercialized and comes with developer access.

Recently Mark Zuckerberg mentioned that a lot of Facebook’s focus will be on the camera and its surroundings. Snapchat has defined itself as a camera company. Apple and Google (Lens, Photos) are also investing heavily in cameras. There is tremendous power in the camera that is still hidden: the power to detect emotions.

Inputs need to be easy, natural and effortless

When Facebook first introduced emoji Reactions as an enhancement to the Like, I realised they were onto something. Facebook chose five emotions to add, which essentially helps them understand emotional reactions to content better. I argued that it is essentially a glorified form of the same thing, but one that works better than anything else. In the past, Facebook only had the Like button, while YouTube had Like and Dislike buttons. But these are not enough for tracking emotions and don’t bring much value to researchers and advertisers. Most people expressed their emotions in comments, and yet there were more likes than comments. Comments are text, or even images and GIFs, which are harder to analyze because there are many contextual connections the algorithm needs to guess. For example: how familiar is that person with the person they are reacting to, and vice versa? What’s their connection with the specific subject? Is there subtext, slang or anything related to previous experience? Is it a continuation of a past conversation? Facebook did a wonderful job of keeping the conversation positive and preventing something like a Dislike button from pulling focus, which could have discouraged content creators and sharing. They kept it pleasant.

Nowadays I would compare Facebook.com to a glorified forum. Users can reply to comments and like them (or use other reactions). We’ve almost reached a point where you can like a like 😂. Yet it is still very hard to know what people are feeling. Most people who read don’t comment. What do they feel while reading the post?

The old user experience for cameras

What do you do with a camera? Take pictures and videos, and that’s about it. There has been huge development in camera apps, with many features that sit around the main use case: things like HDR, slow-mo, portrait mode and so on.

Based on the enormous number of pictures users generated, a new wave of smart galleries, photo-processing and metadata apps appeared.

However, the focus has recently shifted towards the life-integrated camera: a stronger combination of the camera’s strongest traits with the best use cases for what we do with mobile phones. The next generation of cameras will be fully integrated into our lives and could replace all the other input icons in a messaging app (microphone, camera, location).

It is no secret that cameras were among the three main components developed at a dizzying pace: the screen, the processor and the camera. Every new phone pushed these limits year after year. For cameras, the improvements were in megapixels, image stabilization, aperture, speed and, as mentioned above, apps. Let’s look at a few products these companies created to evaluate how this evolved.

Most of the development focused on the back camera because, at least initially, the front camera was perceived as being for video calls only. However, selfie culture and Snapchat changed that. Snapchat’s masks, later copied by everyone else, are still a huge success. Face masks weren’t new (Google had introduced them long before), but Snapchat was more effective at putting them in front of people and growing their use.

Highlights from memory lane

In December 2009 Google introduced Google Goggles, which was the first time that users could use their phone to get information about things that are around them. The information was mainly about landmarks initially.

In November 2011, with the Samsung Galaxy Nexus, facial recognition was introduced to unlock a phone for the first time. Like many first attempts, it wasn’t very good and was later scrapped.

In February 2013 Google released Google Glass which had more use cases because it was able to receive additional input other than just from the camera, like voice. It was also always there and present but it essentially failed to gain traction because it was too expensive, looked unfashionable, and triggered an antagonistic backlash from the public. It was just not ready for prime time.

Until then, devices had only limited information at their disposal: audio and visual input, GPS and historical data. And it was limited in other ways too: Google Glass displayed information on a small screen near your eye, which made you look like an idiot while looking at it and prevented you from looking at anything else. I would argue that putting such technology on a phone for external use is not just a technological limitation but also a physical one. When you focus on the phone you cannot see anything else; your field of view is limited, similar to the field of view in UX principles for VR. That’s why some cities have made lanes for people walking on their phones, and traffic lights that help people not to die while walking and texting. A device like Microsoft’s HoloLens is much more aligned with the spatial environment and can actually help users interact, rather than absorbing their attention and putting them in danger.

In July 2014 Amazon introduced the Fire Phone, which featured four cameras on the front. This was a breakthrough phone in my opinion, even though it didn’t succeed. The four front cameras were used to scroll once the user’s eyes reached the bottom of the screen, and to create 3D effects based on the accelerometer and the user’s gaze. It was the first time a phone used the front camera as an input method to learn from users.

In August 2016 the Note 7 launched with iris scanning that let users unlock their phones. Samsung resurrected an improved version of the face-based unlocking that had sat on the shelf since 2011. Unfortunately, just watching the tutorial is vexing; it made it clear to me that they didn’t do much user experience testing on that feature. It is extremely awkward to hold this huge phone exactly parallel to your face, and I don’t think it’s something anyone should do on the street. I do understand it could work very nicely for Saudi women who cover their faces. But the Note 7 exploded (luckily not in people’s faces while they were iris scanning or in VR), and the whole concept waited another full year until the Note 8 came out.

By then, no one mentioned the feature; it was presented simply as an additional way of unlocking your phone alongside the fingerprint sensor. My guess is that this is because it’s not good enough, or because Samsung wasn’t able to make a decision (as with the release of the Galaxy S6 and S6 Edge). More generally, for something to succeed it needs to have multiple uses; otherwise, it risks being forgotten.

Google took a break and then, in July 2017, released the second version of Glass as a B2B product, with use cases tailored to specific industries.

Now Google is about to release Google Lens, bringing the main use case of the original Goggles into the modern age. It’s the company’s effort to learn more about using visual input with additional context, and to figure out the next type of product it should develop. It seems to be leaning towards a wearable camera.

Many others are exploring visual input as well. For example, Pinterest is seeing huge demand for its visual search Lens, and intends to use it to help people find similar things to buy and to curate.

Snapchat’s Spectacles let users record short videos very easily (even though the upload process is cumbersome).

Facial recognition is now also on the Note 8 and Galaxy S8, but it’s not panning out as well as we’d hoped.

Or https://twitter.com/MelTajon/status/904058526061830144/video/1

Apple is known for being slow to adopt new technology compared with its competitors. On the other hand, it is known for commercializing it, as the number of Apple Watches it has sold compared with other brands shows. This time it was all about facial recognition and the infinite screen. There is no better way of making people use something than removing every other option (like Touch ID). It’s not surprising: last year Apple did this with wireless audio (removing the headphone jack) and with USB-C on the MacBook Pro (by removing every other port).

I am sure there is a much bigger strategic reason why Apple chose this technology at this specific time, and it has to do with its AR efforts.

Face ID has some difficulties that immediately occurred to me, such as the niqāb (face covering) in Arab countries, plastic surgery and simply growing up. But the bigger picture is much more interesting. This is the first time users can do something they naturally do, with no effort, and generate data that is much more meaningful for the future of technology. I still believe that a screen that can read your fingerprint anywhere on its surface is a better way, and it seems like Samsung is heading in that direction (although rumors claimed that Apple tried to do it and failed).

So where is this going? What’s the target?

In the past, companies used special glasses and devices to do user testing. The only output they could give regarding focus was heat maps: they tracked interactions with the mouse and where people physically looked. Yet they weren’t able to document users’ focus, emotions, or how they reacted to what they saw.
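At its core, such a heat map just counts where attention lands. As a minimal sketch, assuming gaze or mouse samples arrive as (x, y) screen coordinates in pixels, the hypothetical `heat_map` function below bins them into a coarse grid; real eye-tracking tools layer smoothing, fixation detection and timing on top of this.

```python
from typing import List, Tuple


def heat_map(points: List[Tuple[float, float]], width: float, height: float,
             cols: int, rows: int) -> List[List[int]]:
    """Count how many gaze or pointer samples land in each cell of a rows x cols grid."""
    grid = [[0] * cols for _ in range(rows)]
    for x, y in points:
        # Ignore samples that fall outside the screen area
        if not (0 <= x < width and 0 <= y < height):
            continue
        # Map pixel coordinates to grid cells, clamping against float rounding
        col = min(int(x / width * cols), cols - 1)
        row = min(int(y / height * rows), rows - 1)
        grid[row][col] += 1
    return grid


# Hypothetical samples on a 100 x 100 screen, binned into a 2 x 2 grid
samples = [(10, 10), (15, 12), (90, 90), (200, 5)]
print(heat_map(samples, 100, 100, cols=2, rows=2))  # → [[2, 0], [0, 1]]
```

The off-screen sample at (200, 5) is dropped rather than clamped, so the counts only reflect attention that actually landed on the screen.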

Based on tech trends, it seems like the future involves Augmented Reality and Virtual Reality. But in my opinion, it’s more about audio and 3D sound combined with visual inputs, gathered simultaneously. This would allow a wonderful experience, such as being able to look anywhere, at anything, and get information about it.

What if we could know where users are looking, where their focus is? For years, marketing and design professionals have tried to capture and analyze this. What better starting point for doing so than the sensor array in a device like the iPhone X? Later on, this should evolve into glasses that can see where the user’s focus is.

Reactions are powerful and addictive

Reactions help people converse, and they raise retention and engagement. Some apps let you send your reaction to a post as a message to your friends. There are some funny videos on YouTube of people reacting to a variety of videos. There is even a TV show, Gogglebox, dedicated solely to people watching TV shows.

At Google I/O, Google decided to let viewers pay creators on its platform, somewhat like the brilliant Patreon site does, but in a much more prominent way: a feature called Super Chat, which helps you, as someone in the crowd, stand out and grab the creator’s attention.

https://www.youtube.com/watch?v=b9szyPvMDTk

I keep going back to Chris Harrison’s student project from 2009, in which he created a keyboard with pressure-sensing keys: if you typed hard, it read your emotion (angry or excited, say) and the letters got bigger. Now imagine combining that with a camera that sees your facial expression; we all know people express their emotions while they’re typing to someone.

https://www.youtube.com/watch?time_continue=88&v=PDI8eYIASf0

How would such UX look?

Consider the pairing of a remote and the central focus point in VR. The center of the view is our focus, but we also have a secondary focus point: where the remote points. However, this type of user experience cannot work in Augmented Reality, unless you want everything to stay very still while you walk around with a magic wand. To take advantage of Augmented Reality, one of Apple’s new focuses, they must know where the user’s focus lies.

What started as Apple’s ARKit and Google’s ARCore SDKs will be the future of development, not only because of the amazing output, but also because of the input they can get from the front and back cameras combined. This will allow for a greater focus on input.

A more critical view on future developments

While Apple opened the hatch by using facial recognition to drive reactive Animojis, it is going to get much more interesting when others start building on Face ID. Currently it manifests in a basic, harmless way, but the goal remains to get more information! Information that will be used to track us, sell to us, learn about us and sell our emotional data, while nonetheless allowing us to have immersive experiences.

It is important to say that the front camera doesn’t come alone; it’s the long-awaited result of Apple buying PrimeSense. The front-facing array includes an infrared camera, a depth sensor and more (I think it could do well with a heat sensor too). It’s not necessarily that someone will keep videos of our faces, but rather that a scraper will document all the information about our emotions.

Summary

It is exciting for Augmented Reality to have algorithms that can read our faces. There have already been many books about identifying people’s facial reactions, but now it’s time to digitize that too. It will be wonderful for many things: for example, robots that can see how we feel and react to those emotions, or glasses that read our expressions to get more context for what we need them to do. Computationally, it’s better to look at a combination of signals, because that combination creates the context that helps the machine understand you better. It could be another win for companies with ecosystems that can be leveraged.

The things you can do if you know what the user is focusing on are endless. Attaining that knowledge is the dream of every person that deals with technology.

Goodbye Stereo, hello 360° Sound

In the past five years, there has been a paradigm shift in the speaker market. We’ve started seeing a new form factor of audio device emerge: the 360-degree speaker. I want to look at the reasons behind this form factor and the benefits it brings us.

First, it is important to look at the market segmentation reasoning:

1. Since Bluetooth was built into phones, there has been a variety of speakers (mainly cheap ones, initially) that sought to abolish the need for cables. Docks and Bluetooth speakers were the answer. But at the time there was no premium Bluetooth speaker and, besides sound quality, there was room for more innovation (or gimmicks, like a floating speaker). One of the solutions created to make the Bluetooth speaker a premium product was the 360° speaker.

The original Bluetooth speakers were directional, and since it is unknown where they will be placed, how many people will listen to them and where those people will sit, a directional speaker is at a disadvantage compared with a 360° one.

2. From another perspective, 360° speakers function as a cheaper alternative to hi-fi audio systems. Many customers are just interested in listening to music in their home in comfort and do not require a whole setup with wires and receivers. They also mainly play music using their mobile phones.

So it fits right in the middle. Now let’s look at some use cases:

Parties — It can be connected to other speakers for more volume. It’s also relatively easy for other people to connect to it.

Multi-room — It can allow you to play music whilst controlling it with your phone in all sections of your house. It can also be controlled remotely.

Conference calls — or actually any call. Your phone’s own speakerphone is an option, but it’s sometimes hard to hear.

Smart — Today we have assistant speakers with microphone arrays that sometimes come in a 360° form. It’s a bit different, but a 360° microphone array is as useful as a speaker array.

I want to focus on the 360° form factor and discuss why it is so important and a real differentiator. To be able to understand more about 360° audio, its advantages and the future of 360° audio consumption, it is important to have a look at the history of sound systems.

The person as sound — Before there were speakers there were instruments. People used their own resonance to make a sound and then found resonance in drums, and string-based instruments. That led to a very close and personal interaction which could be mobile as well. People gathered around a singer or musician to hear them.

Phonograph and Gramophone — This was the first time music became reproducible mechanically. However, it was still mono (one channel). From an interaction perspective, it was a centerpiece with the sound coming out of the horn.

Stereo systems — Stereo was an ‘easy sell’: after all, we all have two ears, so speakers that can please them both are fabulous. Some televisions were equipped with mono speakers, but more advanced televisions had stereo speakers too.

Surround — 3.1, 5.1 and 7.1 systems were introduced mainly for watching movies in an immersive way. These systems included front, rear, center and subwoofer speakers (sometimes even top and bottom ones). It is still quite rare to find music recordings made for surround. Algorithms were also created to mimic surround on headphones.

But there is a limitation with these systems. Let’s compare it to the first two reproducible sound systems: the human voice and the Phonograph. They both had more mobility. You could place them wherever you wanted to and people would gather around and listen to music. I can’t say it’s exactly the same experience, but it doesn’t hurt the premise of the instrument. However, with stereo systems and surround systems, you need to sit in a specific contained environment in a specific way to really enjoy their benefits. Sitting in a place where you cannot really sense that spatial experience makes these systems redundant.

Audio speakers in the present

Considering current technologies and their usage, our main music source is our mobile phones. It’s a music source that doesn’t have to be physically connected via cables. Our listening experience is more like a restaurant experience where it’s not important where the audio is coming from as long as it’s immersive. 360° speakers then were able to provide exactly that with fewer speakers. But we lost something along the way, we lost stereo and surround. In other words, we lost the immersive elements of spatial sound.

Audio speakers in the near future

There are huge investments in VR, AR and AI, and all of these fields are affecting sound and speakers. In VR and AR we are immersed visually and aurally, currently using a headset and headphones. At home we’ve started controlling devices with our voices: turning lights on and off, changing music and so on.

Apple’s HomePod shows huge promise in this respect. Its spatial algorithm could be the basis for incredible audio developments. Apple might have been late to the 360° market, but it has tremendous experience in audio and computing, and this is why I think this is the next big audio trend: “the spatially aware 360° speaker”.

Although it is sold as one speaker, it can obviously be bought in pairs or more. The way these speakers understand each other will be the key to this technology.

Spatiality is important because in a 360° speaker a lot of sound goes to waste, and a lot of power is used inefficiently. Some of that sound is pushed against a wall, which causes too much reverb. Most of the high-frequency sound that is not projected towards you is useless.

Here are the elements to take into account

- Location in the room — near a wall, in the corner, center?

- Where is the listener?

- How many listeners are there?

- Are there other speakers and where?
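To show how the first three questions could feed into a spatially aware speaker, here is a minimal sketch. It is not Apple’s algorithm (which is not public); it assumes a ring of drivers evenly spaced around the cabinet, listener and wall bearings already known in degrees, and made-up tuning constants (`ambient_floor`, `wall_cut`). The hypothetical `driver_gains` function gives each driver more level the more directly it faces any listener, cuts drivers that fire into a nearby wall, and normalizes the result to a constant total power.

```python
import math
from typing import List, Optional


def angular_distance(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two bearings, in degrees (0 to 180)."""
    d = abs(a_deg - b_deg) % 360.0
    return 360.0 - d if d > 180.0 else d


def driver_gains(num_drivers: int, listener_degs: List[float],
                 wall_deg: Optional[float] = None,
                 ambient_floor: float = 0.2, wall_cut: float = 0.5) -> List[float]:
    """Hypothetical per-driver gains for a ring of evenly spaced drivers.

    listener_degs must be non-empty. ambient_floor and wall_cut are
    illustrative tuning values, not measured ones.
    """
    gains = []
    for i in range(num_drivers):
        driver_deg = 360.0 * i / num_drivers
        # Cosine weighting: a driver pointing straight at any listener gets full level
        toward = max(math.cos(math.radians(angular_distance(driver_deg, b)))
                     for b in listener_degs)
        gain = ambient_floor + (1.0 - ambient_floor) * max(0.0, toward)
        # Drivers firing within 45 degrees of a nearby wall mostly add reverb, so cut them
        if wall_deg is not None and angular_distance(driver_deg, wall_deg) < 45.0:
            gain *= wall_cut
        gains.append(gain)
    # Normalize so the summed power (sum of squared gains) stays at 1
    norm = math.sqrt(sum(g * g for g in gains))
    return [g / norm for g in gains]


# Six drivers, one listener straight ahead (0°), a wall behind the speaker (180°)
print([round(g, 2) for g in driver_gains(6, [0.0], wall_deg=180.0)])
```

Keeping a small `ambient_floor` means no driver goes fully silent, so the speaker still fills the room rather than behaving like a purely directional one.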

In Apple’s demonstration, it seems that some of these are being addressed. It’s clear that they thought about these use cases and therefore embedded their chip in the speaker, which might get better over time.

The new surround

360° speakers can already simulate 3D sound, depending on the array of drivers inside the shell. This means you can hear stereo if you position yourself in the right place.

But things get much more interesting if the speakers are aware of your location. If you are wearing a VR headset and have two 360° speakers, you could potentially walk around the room and have a complete surround experience. A game could be super immersive without the need for headphones. Projected into AR, a room could accommodate more than one person at a time.

Consider where music is listened to. In most instances, a 360° speaker would be of greater benefit than a stereo system: in cars (which usually have four speakers), offices and clubs, 360° speakers would work better. Even headphones could be improved by using spatial awareness to block noise from the surrounding environment and a compass to communicate your orientation. Even the TV experience could be upgraded with just HomePods and some software advancements.

What about products like Amazon Echo Show?

A screen is a classic one-directional interaction. Until we have 360-degree screens that work like a crystal ball with 360° audio, I don’t see it becoming the next big thing; after all, we still have our phones and tablets.

The future of 360 in relation to creation and consumption tools

Here are a bunch of hopes and assumptions:

1. Music production and software will adopt 360° workflows to support the film and gaming industries, similar to 3D programs like Unity, Cinema 4D and Adobe’s tools.

2. New microphone setups will arise that record an environment using three or more microphones. It will start with ways to reproduce 3D from two microphones, as field recorders do, but will quickly move to more advanced instruments driven by mobile phones, which will adopt three to four microphones each to record 360° videos with 360° sound. Obviously, this will be reflected in standalone 360° cameras as well.

3. A new file type that can encode multiple audio channels will emerge and it will have a way of translating it to stereo and headphones.
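The “translating it to stereo” step already exists for today’s surround formats, which gives a feel for what such a file type would need. As an illustration, assuming a 5.1 source, here is a minimal sketch of a conventional fold-down: the center and surround channels are mixed into left and right at about minus 3 dB (a factor of 1/√2, the coefficient commonly cited from ITU-R BS.775), and the LFE channel is dropped. `downmix_51_to_stereo` is a hypothetical helper, not part of any audio library, and a future 360° format would need its own, more elaborate rules.

```python
import math
from typing import Dict, List, Tuple

# About -3 dB (1/sqrt(2) ≈ 0.7071): the gain applied to center and surround channels
K = 1.0 / math.sqrt(2.0)


def downmix_51_to_stereo(ch: Dict[str, List[float]]) -> Tuple[List[float], List[float]]:
    """Fold a 5.1 signal (keys L, R, C, LFE, Ls, Rs) down to two channels.

    The LFE channel is ignored, as many simple fold-down schemes do.
    """
    n = len(ch["L"])
    left = [ch["L"][i] + K * ch["C"][i] + K * ch["Ls"][i] for i in range(n)]
    right = [ch["R"][i] + K * ch["C"][i] + K * ch["Rs"][i] for i in range(n)]
    # Scale down only if the fold-down would exceed full scale (1.0)
    peak = max([abs(s) for s in left + right] + [1.0])
    return [s / peak for s in left], [s / peak for s in right]


# A centre-only sample (such as dialogue) lands equally in both output channels
frame = {"L": [0.0], "R": [0.0], "C": [1.0], "LFE": [0.0], "Ls": [0.0], "Rs": [0.0]}
print(downmix_51_to_stereo(frame))
```

Peak normalization is the simplest guard against clipping; a real encoder might instead use fixed headroom or a limiter.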

I can’t wait to see this becoming reality and having a spatially aware auditory and visual future based on augmented reality, using instruments like speakers or headphones and smart glasses to consume it all.

Here are a couple of companies/articles that I think are related

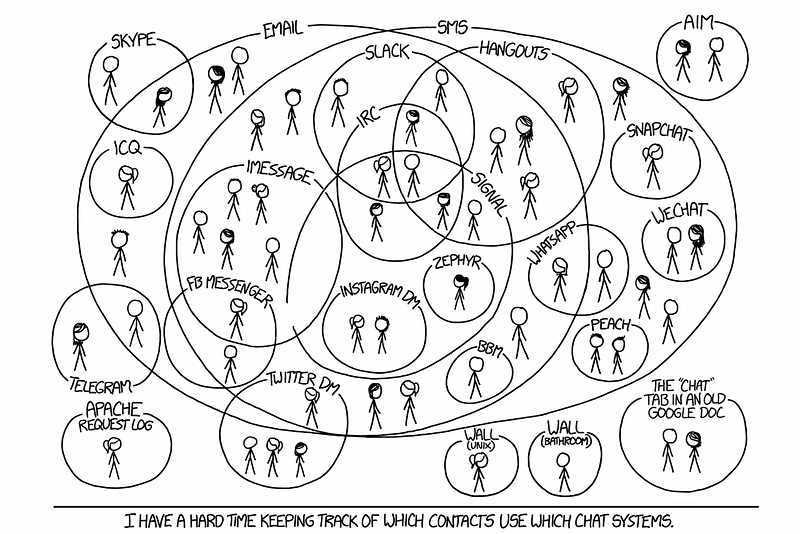

Finished copying Snapchat? What’s next in messaging

In the past year we’ve seen more copying than innovation in the world of messaging. In 2016 everyone did stories, disappearing messages etc. Instead of simply trying to mimic Snapchat there are other useful avenues to explore that can simplify people’s lives and increase engagement. In this article I will analyze key areas in the world of digital conversation and suggest practical examples of how it could be done.

What should be enhanced in messaging:

As users we spend most of our screen time communicating. Therefore there is a need for it to be easy and comfortable; every tap counts.

Part 1

Comfort — The product needs to be easy to adopt, following familiar patterns from real life and enhancing them.

Rich messaging — Introducing new experiences that help users articulate themselves in unique ways. Charge the conversation with their personality.

Part 2

Automation — Reducing clutter and simplifying paths for repeated actions.

Control — A way for users to feel comfortable sharing more without increasing their vulnerability.

Rich messaging

In a broader sense, being rich means having a variety of options, most of which are cheap for you. In a messaging UX context, cheap means content that is easy to create and interactions that help deliver and sharpen the message. Rich messaging can show up in both input and output. It is tailored to the user’s context, which facilitates ongoing communication and increases engagement.

In the past, and still in many developing countries, the inability to send large files, including voice messages, left many people simply texting. This even pushed more people to learn to read and write. Still, text is a harder medium to communicate in and technologically primitive, which is why users have come to expect more.

Take voice input and output, for example. It’s a strong candidate, as investment in this area keeps growing. Speaking is easy; in fact, sound is arguably the easiest way for humans to absorb and share information.

There are scenarios where voice can be the preferred communication method, such as when you’re driving, when you’re alone in a room, when you’re cooking, and when you’re doing anything else that keeps you relatively busy.

Here are a few examples of rich messaging:

Voice to text — Messaging is asynchronous, and to drive engagement the goal is to bring it closer to synchronous. It doesn’t matter how the content was created. What matters is that it reaches the other person in a form they can consume right now!

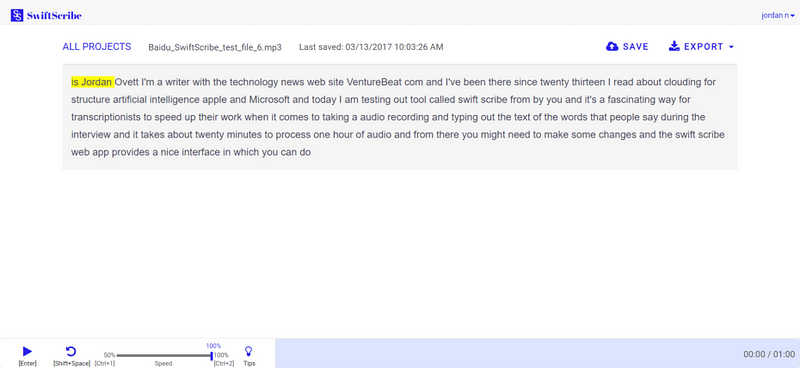

Voice dictation has improved tremendously, but most of the focus has somehow been on real-time use. Messaging, by its nature, allows a non-real-time solution, and that extra time can be used to produce a better-quality LPM (Language Processing Model) transcript. For example, Baidu recently launched SwiftScribe, which lets users upload an audio file for the program to transcribe.

Another recent example is Jot Engine, a paid service designed with interviews in mind. I use Unmo and PitchPal to help me prepare for pitches.

They’re not perfect, but they remove the guesswork of trying to understand someone in real time. With a full recording, the algorithm can search for context, identify the subject, and rework the language accordingly. I would argue that when someone sends you a voice message, you should receive a transcript with the option to listen to the audio as well. As a user, you could listen to it, or just read it if you are somewhere you can’t use sound.

Conversely, when a user is in the car, they should be able to listen to text messages sent to them and answer them by voice.

Voice context attachment — A good example of this concept is the Livescribe pen. It lets users write notes that are stored digitally while recording audio to add context. I admit it’s a niche idea that won’t suit everyone, but its potential in a professional context is clear.

Metadata — Location is only one of the metadata elements that could be kept with messages; others include how fast you type, how hard you press the keys, and who is around you. The possibilities for enriching text and providing context are endless and have barely been explored.

It baffles me that no one has done this yet. The cloud actually has more capacity to interpret a message and learn from its context. In photo apps like Google’s or Samsung’s, you can see a growing reliance on context, including location, details of the picture, live photos, and so on. All these elements should be collected and attached when they are relevant.

Engagement and emotional reactions — With services like Bitmoji, users can create their own personal stickers: exaggerated versions of themselves. Seeing this in video would be very interesting. Psychologically, these stickers help users amplify their emotions and share content in ways they wouldn’t if they were communicating in person.

Similarly, masks on Snow (like Snapchat’s live filters) help users express their feelings, but at the moment they are just an odd selection with only a fragment of context about where users are or what they are doing. You can see users posting themselves with a mask not to tell a story but just for fun. If masks could tell a story or reflect reality, they would become emotionally meaningful, which would elevate them as a mode of expression.

Comfort of Use

Comfort depends on how people use communication tools. Surfacing important, relevant details and offering accessible ways of communicating are the backbone of the UX.

Contextual pinning — Recently Slack added an incredible feature straight out of the 90s: threads. If you’ve ever posted on a forum, you know that a thread is a way to hold a conversation on a specific topic. Twitter calls them stories and Facebook simply uses comments. But the news here was bringing this feature into the messaging world, which generated a lot of excitement among Slack’s users.

However, I see it as just a start. For example, let’s say you and a few friends are trying to set up a meeting, and the usual trash talk is going on while you’re trying to make a decision. Later, another member of the group jumps into the thread but finds it really hard to follow. What has been discussed, and what has been decided? It would be incredibly helpful if users could pin a topic to a thread and accumulate the results of the discussion there. Then they could get rid of it once the event had passed.
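The pinned-topic idea can be sketched as a small data model. This is a minimal illustration, not how Slack implements threads: `Thread`, `PinnedTopic`, and the rule that a pin expires after its event date are hypothetical names and assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class PinnedTopic:
    """A topic pinned to a thread that accumulates the outcome of the discussion."""
    title: str
    expires_on: date  # assumption: the pin is dropped once the event has passed
    decisions: list[str] = field(default_factory=list)


@dataclass
class Thread:
    messages: list[str] = field(default_factory=list)
    pins: list[PinnedTopic] = field(default_factory=list)

    def pin(self, title: str, expires_on: date) -> PinnedTopic:
        topic = PinnedTopic(title, expires_on)
        self.pins.append(topic)
        return topic

    def summary(self, today: date) -> list[str]:
        """What a latecomer sees first: the active pins and what was decided."""
        # Remove pins whose event date has already passed.
        self.pins = [p for p in self.pins if p.expires_on >= today]
        return [f"{p.title}: {', '.join(p.decisions) or 'undecided'}" for p in self.pins]
```

A latecomer calling `summary()` sees the pinned topic with its accumulated decisions instead of scrolling through the trash talk, and the pin disappears once its date has passed.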

Here is another good example

Accessibility

Image/video to message — Instead of just telling users “Jess sent you a picture” or “Jess sent you a video”, you could tell them something useful about its content. Most notification systems are currently limited to text, so why not take advantage of the image-processing capabilities that already exist? On richer platforms, you could convert the important parts of a video into a GIF.

* In that context, it’s quite a shame that Apple still doesn’t bundle notifications, which helps tell the story the way it happened without spamming the user. I’m not sure I like Android O’s notification groups, but they are definitely better than an endless list of notifications.

Voice-typed emoji — Keyboards are moving toward predictive emoji and text, recognizing that a separate emoji keyboard adds friction for the user, especially since emoji are used so often. A neat way to unify the two would be a spoken vocabulary for emoji, making them easy to insert into messages by voice.

In-chat translation — We’ve seen improvements from Skype’s instant translation and Facebook’s and Instagram’s “Translate this”. Surely it makes more sense to have this in a chat than in the feed? Probably not many people chat with others in a different language, but with such a feature they might start to. It could also be a great learning tool for companies, and users might even help improve the translation algorithm.
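Bundling can be sketched as grouping notifications by conversation before displaying them. This is a hypothetical Python sketch, not Apple’s or Android’s notification API; the `Notification` fields and the summary wording are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    conversation: str  # the chat or group the message belongs to
    sender: str
    text: str


def bundle(notifications: list[Notification]) -> list[str]:
    """Collapse notifications into one line per conversation, in order of first arrival."""
    groups: dict[str, list[Notification]] = {}  # dicts preserve insertion order (3.7+)
    for n in notifications:
        groups.setdefault(n.conversation, []).append(n)

    lines = []
    for conversation, items in groups.items():
        if len(items) == 1:
            # A single message is shown as-is.
            lines.append(f"{items[0].sender}: {items[0].text}")
        else:
            # Several messages become one summary line instead of a stream of alerts.
            senders = ", ".join(sorted({n.sender for n in items}))
            lines.append(f"{conversation}: {len(items)} new messages from {senders}")
    return lines
```

Grouping by conversation keeps the order in which each story started while replacing repeated alerts with a single line.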

See you in Part 2…

Reimagining storage

Storage is everywhere

Look around your house: half of the things in it are for storage. Sometimes there is even storage for storage, like a drawer for small pots that nest inside bigger pots. Computer science borrowed this metaphor to help people understand where things are. Over time, new metaphors arrived, such as search and ephemeral links. When I was 21, I taught a course called “Intro for computers” to elderly people, and I remember explaining how files are structured in folders. I could see how the physical metaphor helped them understand how digital things work.

Compared with physical objects in a house, it’s harder to anticipate where things will be on a computer if you weren’t the one who put them there. Then search came, yet even today search works far better for things online than for the files on our own computers. A lot of research has gone into indexing web content, driven largely by search engine optimisation and the business benefits of reaching the top three results on a search page. However, people are not familiar with these methods and don’t apply them to the files on their devices.

In iOS, Apple chose to eliminate the Finder and visible files altogether. Apps replaced desktop icons, the App Store replaced thousands of websites, and the only folders we actively access on the phone are the gallery, videos, and music. Even Android hides its folders, and through Share menus users can mostly avoid the file-shuffling between apps that was the norm on computers.

How do we deal with files nowadays?

- We sometimes look them up via search

- We put them in folders, in folders, in folders

- Files sometimes arrive as attachments in emails or messaging apps.

- If we are advanced users, we tag them with words or colors

So here are the problems:

- Search — It exists but indexing needs to happen automatically.

- Self organisation — lacking and not automated in any way.

A new horizon for our content

Companies see value in holding on to user data, which is, of course, stored in the cloud. There, they can compare and analyze it, run machine learning on it, and segment it for advertising. Centralizing data also lets them give us a smoother experience by building an ecosystem.

But storage isn’t just a file or a folder. It has a physical presence as a file, but it could also be an object in real life, part of a story, or a timeline with interactions, challenges, and emotions. How can we rate the importance of things and link them to past events? How can people relive a day from long ago, Black Mirror style?

The average person has x amount of files. That is probably x photos, x videos, etc. For many of these there is dedicated software: for music you have players; for photos, galleries with new search and event-creation features; for video, your library or Netflix. But what about the rest of your files? What about your Excel sheets, your writing, your projects?

Think of the revolution around photos over the past couple of years. We started with albums, then got stories and events. On top of that came sharing, identifying who is in the picture, and conquering the world with our pins, and then another layer of filters, animations, text, drawings, and emoji. And that is without even mentioning video, which needs to adopt these kinds of paradigms to create meaningful movies that are nicer to share.

At work, products like Slack or HipChat try to eliminate the file by opening different streams of information.

What these products still lack is the ability to turn all these streams into a meaningful project, which is what project management software attempts. Project management software displays things in different ways to cover different use cases and needs. However, most of its innovation is still around aggregation: displaying calendars, tasks, and reports. Things get complicated when tasks start having files attached to them.

What kind of meaningful file systems can we create?

Imagine the revolution that happened for photos happening for files. Photos already have so much information linked to them, like geolocation, faces, events, text, and their content. We finally have software that can analyse our files. A good example is the LipNet project from the University of Oxford, which reads subtitles from moving lips in video. Imagine the power of AI working on your files: all those revisions, all that old stuff, memories, movies, books, magazines, PDFs, Photoshop files, sheets, and notes.

How many times have you tried to stay organised with revisions and saved copies of documents? How many times have you created a file management system you thought would work, or tried new software to run multiple things, only to realise soon after that it doesn’t work for everything? It is a challenge that hasn’t been solved at work or at home.

Some cloud-focused products have started planting seeds of this concept, usually in the form of version control and shared files. It is amazing that people can now collaborate in real time. However, I think it will spiral into the same problem: there are no good ways to manage files and bring them together into a meaningful, contextual structure that could help us get rid of nesting and fragmented content once and for all.

In terms of user navigation, there are currently five ways to reach content on the web:

- Search — have a specific thing you’re looking for

- Discovery — start with a general search or navigation and explore

- Feed — follow people

- Message — get content pushed to you by others

- URL dwelling and navigation — repeatedly going in and out

But on a computer, with your own creations, there are only two:

- Search — for a specific file, which may then be associated with a folder

- Folder dwelling — No discovery, just looking around clumsily for your stuff and trying to create a connection or trying to remember.

It’s early days for this type of service

There are some initial sparks of innovation around systems that simplify files, for example version control in Google Drive or Dropbox. Being able to keep working on the same file, under the same filename, without worrying about losing important information is comforting. No longer juggling separate revision files is a good step toward finding things more easily.

Code hosting platforms like GitHub also help manage projects with version control that allows collaborative work and merging of branches. That is another good step, since it shows that companies are thinking about projects of files as a whole. However, it still can’t create contextual connections or meaningful testing environments.

Final note

AI systems are finally here, and what they do in the cloud they can also do with our files. Maybe we can finally get rid of files as we think of them and be organised and contextual; and, god forbid, the structure doesn’t have to be permanent. It can be dynamic, adapting to users’ needs and the content they accumulate every day.

Voice assistants and privacy

Voice assistant technologies are hyped nowadays, but one of the main concerns voiced about them is privacy: devices listen to us all the time and document everything. For example, Google keeps every voice search users make and uses them to improve its voice recognition and provide better results. Google also provides the option to delete these recordings from your account.

A few questions come to mind: How often do companies go over your voice recordings? How often do they compare them with other samples? How much does recognition actually improve thanks to them? I will try to guess answers to these questions and suggest solutions.

A good example of a privacy-conscious approach is Snapchat. Messages in Snapchat are controlled by the user, and they also disappear from the company’s servers. Given the age group it targeted, it was a brilliant decision, since teenagers don’t want their parents to know what they do and generally want to “erase their sins”. Having things erased is closer to a real conversation than a chat log.

Now imagine this privacy solution in a voice assistant context. Even though users want the AI to know them well, do they want it to know them better than they know themselves?

What do I mean by that? Some users wouldn’t want their technology to frown on them and criticize them. Users also prefer that their data not be used to punish them for driving fast or being unhealthy, a model that insurance companies are now leading.

Having spent a lot of time in South Korea, I have had my share of joy rides with taxi drivers. The way their car navigation works is quite grotesque: imagine a 15-inch map screen that turns blood red, with obnoxious sound effects, whenever the driver exceeds the speed limit.

Instead, users might prefer a supportive system that can distinguish public information, which can be shared with the family, from private information, which is more comfortable to consume alone. Situations like this are quite common when driving. Here is an example: a user is driving with a friend in the car. Someone calls, and because the call will play through the car’s sound system, the driver has to announce that someone else is present. The announcement defines the context of the conversation, so that the caller avoids anything that might be private.

The voice assistant will need contextual information so it can figure out exactly what scenario the user is in, and how and when to address them. But we will probably need to tell it about our scenario in some way, too, just as your wife can hear that you are with someone in the car but can’t quite tell who, so she might ask, “Are you with the kids?”

Voice = social

Talking is a social activity that most people don’t do alone. Remember when Bluetooth headsets first came out? People in the street thought you were speaking to them when you were actually on the phone. Another example is hands-free calling in the car: some people thought the guy sitting in his car was crazy because he was talking to himself.

Because talking is social, we need to be wary of whom we speak to and where, and so does the voice assistant. I know a lot of parents with embarrassing stories of their kids blabbing things they shouldn’t in front of a stranger, often something the parent said about a person or a social group. How would you educate your voice assistant? By creating a setup in which you actively choose what to share with it.

Companies might aspire to collect as much data as possible, but I doubt they really know how to use it, and it doesn’t match what consumers expect. From the user’s perspective, they probably want their voice assistant to be more like a dog than a human or a computer. People want a positive experience with a system that helps them remember what they forget and forgets what they don’t want to remember: a system that remembers you wanted to buy a ring for your wife but doesn’t say it out loud next to her, reminding you in a more personal way instead; a system that remembers your favorite show is back but doesn’t announce it next to the kid, because it’s not appropriate for their age.

A voice assistant that has tact.

Being a dog is probably the most voice assistants can be nowadays. They will progress, but in the meantime users will settle for something cute like Jibo, which has some charm when it makes a mistake and can at least learn not to repeat it. If a mistake happens, for example if it tells something to someone else, users will expect a report about what was told to other people in the house. The voice assistant should bear some responsibility.

Privacy mistakes can happen, but we need to know about them before it is too late.
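The ring and TV-show examples suggest a simple rule: before speaking, check who is in the room. A minimal sketch, assuming the assistant already knows who is present (for instance through voice recognition, which is not shown); `Reminder`, `hidden_from`, and `route_reminders` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Reminder:
    text: str
    # Hypothetical field: people who must not overhear this reminder.
    hidden_from: set[str] = field(default_factory=set)


def route_reminders(reminders: list[Reminder], present: set[str]) -> tuple[list[str], list[str]]:
    """Split reminders into those safe to say out loud now and those to deliver privately."""
    aloud: list[str] = []
    private: list[str] = []
    for r in reminders:
        if r.hidden_from & present:
            # Someone in the room shouldn't hear this: deliver it to the owner privately instead.
            private.append(r.text)
        else:
            aloud.append(r.text)
    return aloud, private
```

With the wife in the room, the ring reminder is routed privately while everything else is spoken; once she leaves, it can be said out loud.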

Using Big Data

The big promise of big data is that it could heal the world by learning from our behavior. A growing number of systems are built to cope with the abundance of information; whether they actually cope is still an open question. Many of these companies seem to be in the business of collecting for the sake of selling. They don’t really know what to do with the data; they just want to have it in case someone else does. Therefore I am not convinced that the voice assistant needs all the information being collected.

What if it saved just one day of your data or a week, would that be contextual enough?

Last year I was fascinated by a device called Kapture. It records everything around you at any given moment, but if you notice something important happen, you can tap it and it will save the previous 2 minutes. Saving things retrospectively, capturing magical moments before you even realize they were magical: that’s incredible. You effortlessly collect data and curate it, while everything else is gone. Leaving voice messages to yourself, writing notes, sending them to others, getting a summary of your notes, of what you cared about, what interested you, and when you save most: all of these scenarios could be the future. The problem it solved for me was how to capture something that is already gone while keeping my privacy intact.
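Retrospective saving like this behaves as a rolling buffer: audio is kept only for a fixed window and silently discarded after that, and a tap persists whatever is currently in the window. The sketch below assumes a time-based window of 120 seconds to match the two minutes mentioned above; it illustrates the idea and is not based on how Kapture is actually built. `RollingRecorder`, `record`, and `tap` are hypothetical names.

```python
from collections import deque


class RollingRecorder:
    """Keeps only the last `window` seconds of audio; older chunks are discarded."""

    def __init__(self, window: float = 120.0) -> None:
        self.window = window
        self.buffer: deque = deque()  # (timestamp, chunk) pairs, oldest first
        self.saved: list[list[bytes]] = []

    def record(self, timestamp: float, chunk: bytes) -> None:
        self.buffer.append((timestamp, chunk))
        # Drop everything that fell out of the window; it is never persisted.
        while self.buffer and self.buffer[0][0] < timestamp - self.window:
            self.buffer.popleft()

    def tap(self) -> list[bytes]:
        """The user noticed something worth keeping: persist the buffered window."""
        clip = [chunk for _, chunk in self.buffer]
        self.saved.append(clip)
        return clip
```

The privacy property comes from the structure itself: anything older than the window no longer exists, so only moments the user explicitly taps are ever kept.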

Social privacy

People are as obsessed with looking at their own information as they are with looking in the mirror. It’s addictive, especially when the experience is positive.

In social contexts, the rule of “the more you give, the more you get” works, but it breaks down in software. Maybe that will change in the future, but today’s software just doesn’t have the variability and personalization required to actually make life better for people who are more “online”. The experience is more or less the same whether you have 10 friends on Facebook or 1,000; to be honest, it’s probably worse with 1,000. The same applies to Twitter and Instagram. Imagine what Selena Gomez’s Instagram looks like. Do you think someone at Instagram thought of that scenario, or gave her more tools to deal with it? Nope. Companies seem to talk about this but rarely act on it, and that definitely applies to voice data collection.

It seems clear that what users reveal is out of proportion to what they get in return. One of the worst user experiences is, for example, signing into an app with Facebook. The user is led to a screen that asks them to grant access to everything… and in return they are promised they can take notes by voice. Does that have anything to do with their address or their online friends? No. Information is too cheap nowadays, and users have gotten used to just pressing “agree” without reading. I hope we can standardize a fair return for what users give, while breaking information down into sensible, granular permissions.

Why should we be listened to and documented every day if we can’t use the results? Permissions should be flexible, and there should be a way to make the voice assistant stop listening when we don’t want it to. Leaving the room makes sense when we don’t want another person to hear us, but what would that look like when the voice assistant is always with us? Should we tell it “stop listening for five minutes”?
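One way to break information down is to let each feature declare the data it needs, so an app can only ask for what the user’s enabled features require. The feature and scope names below are hypothetical and do not correspond to Facebook Login’s real permission names; this is a sketch of the principle, not an existing API.

```python
# Hypothetical mapping from app features to the data each one actually needs.
FEATURE_SCOPES: dict[str, set[str]] = {
    "voice_notes": {"microphone"},
    "share_note": {"contacts"},
    "location_tags": {"location"},
}


def scopes_to_request(enabled_features: set[str]) -> set[str]:
    """Ask only for the scopes required by the features the user turned on."""
    needed: set[str] = set()
    for feature in enabled_features:
        needed |= FEATURE_SCOPES[feature]
    return needed


def excessive_scopes(requested: set[str], enabled_features: set[str]) -> set[str]:
    """Scopes an app asks for that none of its enabled features justify."""
    return requested - scopes_to_request(enabled_features)
```

Under this rule, a voice-notes app could justify the microphone, but a request for contacts, friends, or an address would be flagged as excessive.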
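A “stop listening for five minutes” command could be as simple as a timed mute that drops audio on the device. A minimal sketch under that assumption; `Microphone`, `stop_listening`, and `hear` are hypothetical names, and the clock is injectable so the behaviour can be checked without waiting.

```python
import time
from typing import Callable, Optional


class Microphone:
    """Microphone gate that honours 'stop listening for N minutes'."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock  # injectable so tests can use a fake clock
        self.muted_until = float("-inf")

    def stop_listening(self, minutes: float) -> None:
        self.muted_until = self.clock() + minutes * 60

    def resume(self) -> None:
        self.muted_until = float("-inf")

    def is_listening(self) -> bool:
        return self.clock() >= self.muted_until

    def hear(self, audio: bytes) -> Optional[bytes]:
        # While muted, audio is dropped on the device instead of being passed on.
        return audio if self.is_listening() else None
```

Dropping audio at the gate, rather than recording and deleting it later, is what makes the mute meaningful from a privacy point of view.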

The term artificial intelligence evokes a brain, but maybe we should think of its use and creation as more related to a heart. Artificial Emotional Intelligence (A.E.I.) could help us think of the assistant differently.

Use or be used?

How does it improve our lives, and what price do we pay for it? In “Things I would like to do with my Voice Assistant” I compared how useful some capabilities would be with how much data each would need to become a reality.

So how far is the voice assistant from reading emotions, having tact, and syncing with everything? Can this happen while taking privacy into account? Does your assistant snitch on you, or does it tell you when someone was snooping and asking weird questions? It’s not enough to choose methods like differential privacy to protect users. Companies should really consider the value of loyalty, creating a stronger bond between the machine and the human rather than between the machine and the company that created it.

Further into the future, we might reach scenarios like these:

There could be a behavioral understanding mechanism that mimics a stranger who has just met you in a pub. If you behave in a certain way, that person will probably know how to react supportively even though they didn’t know you before. In the same way, a computer that recognizes these kinds of behaviors could react to you, even more so if sensors tell it about your physical state and it can recognize facial patterns and tone of voice.

Another good example is doctors, who can often diagnose a patient’s illness without looking at their full health history. Of course it’s easier to look at everything, but they usually do that only when they need to figure out something that isn’t simple. When things are simple, it should be faster, and in tech’s case, more private.

Summary

There are many ways to make voice assistants more private while helping people trust them, but no company seems to have adopted this strategy yet. It might require a company that doesn’t rely on an advertising-driven business model: one that releases into the wild a machine that becomes a friend, with a double duty to the company and the user, but one that is at least truthful and open about what it shares.
